Welcome to Llumen

Why Llumen?

Most self-hosted AI interfaces are built for servers, not devices. They're powerful but demand heavy resources and hours of configuration.

Llumen carves out a different space: privacy without the complexity. You get the features you actually need, optimized for modest hardware such as a Raspberry Pi, an old laptop, or a minimal VPS, while keeping many features of commercial products.

Private

100% local. Your conversations never leave your device.

Lightweight

~17 MB binary, <128 MB RAM usage

Zero-config

30-second Docker setup. No config files needed.

Quick Comparison

| | Privacy | Power | Setup |
|---|---|---|---|
| Commercial (ChatGPT) | ❌ Cloud-only | ✅ High | ✅ Zero-config |
| Typical Self-Host (Open WebUI) | ✅ Local | ✅ High | ❌ Config hell |
| llumen | ✅ Local | ⚖️ Just enough | ✅ Zero-config |

Key Features

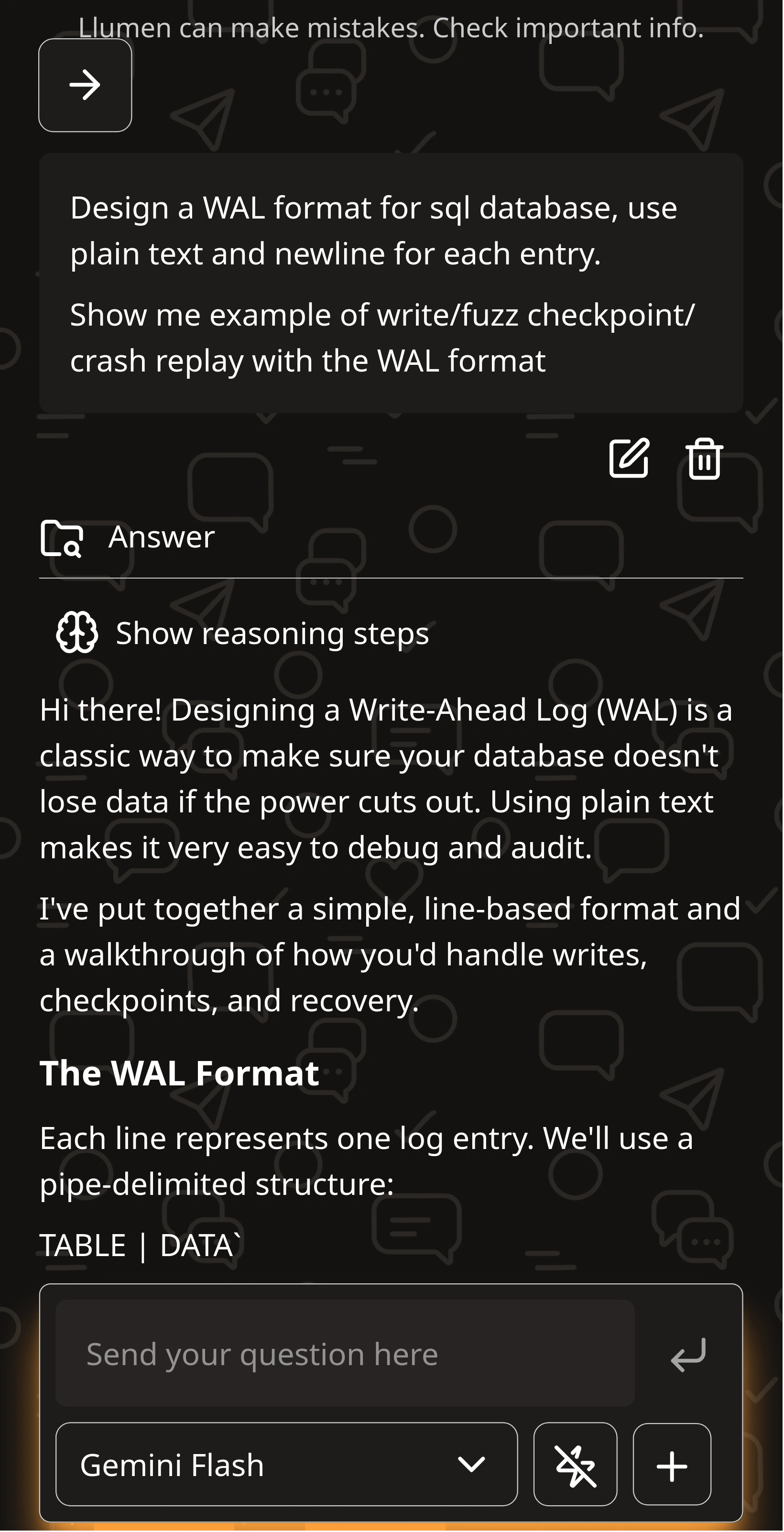

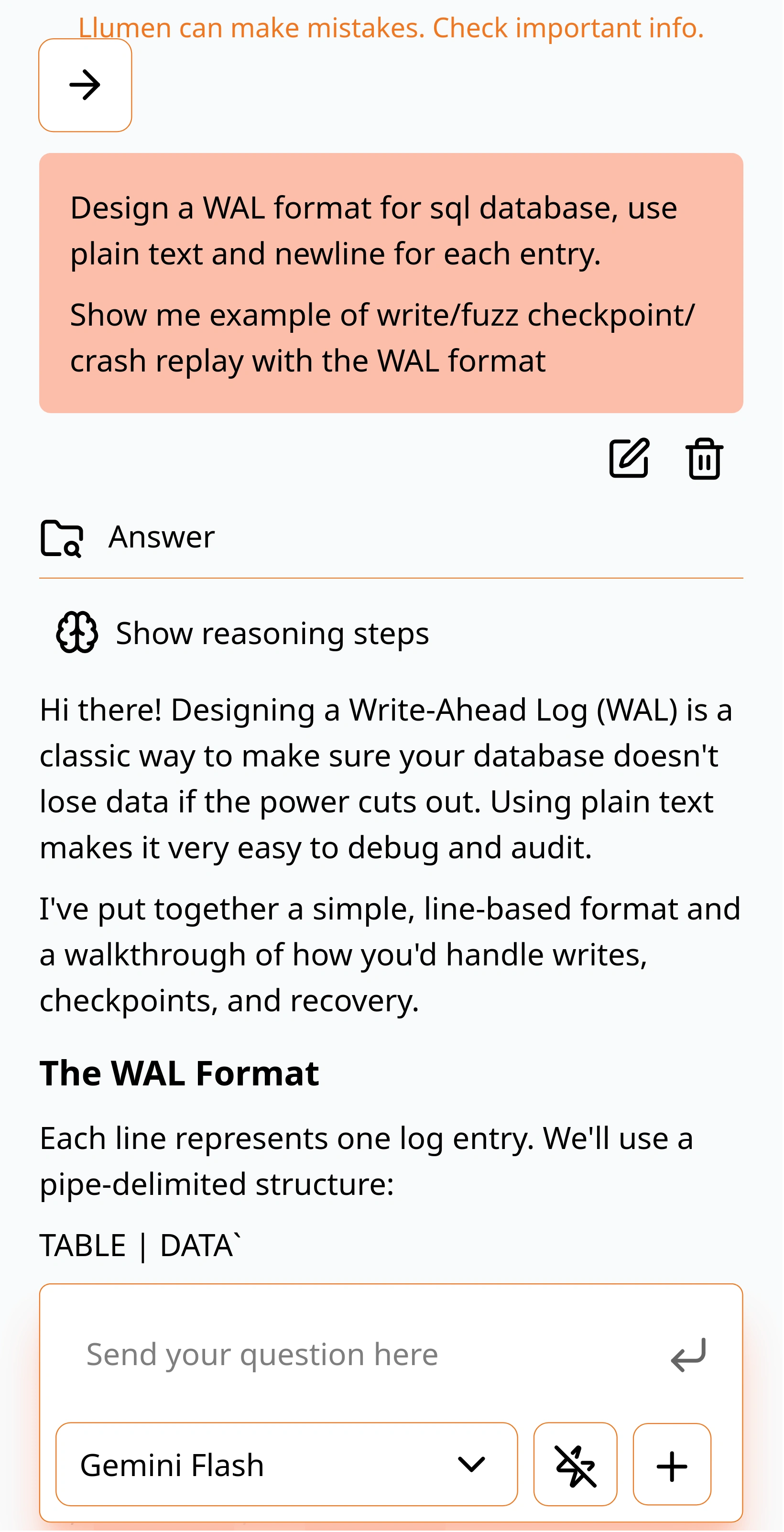

Chat Modes

Normal, Web Search, and Deep Research with autonomous agents

Rich Media

PDF uploads, LaTeX rendering, image generation

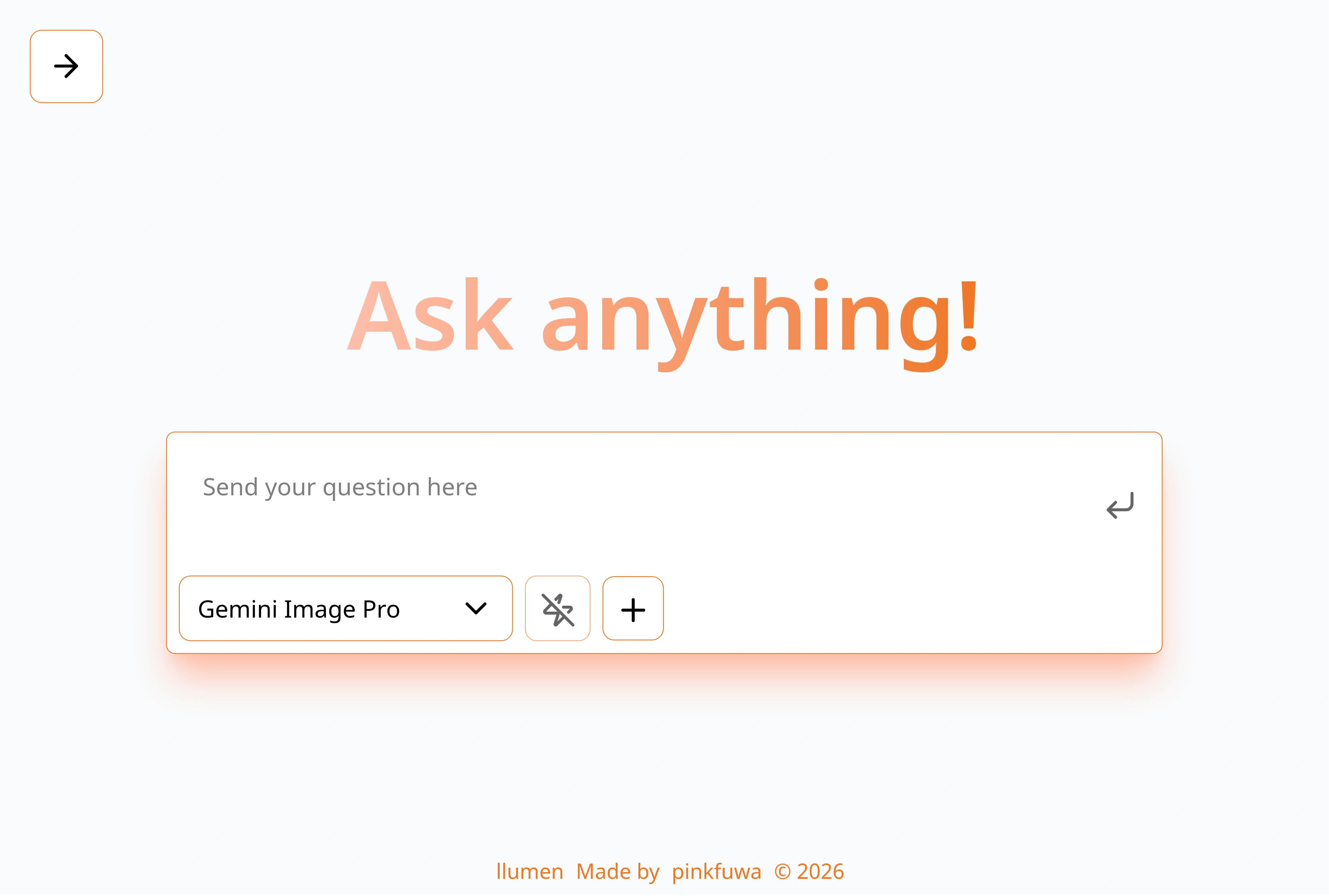

Beautiful Themes

Multiple gorgeous mobile-optimized themes

Lightning Fast

Sub-second cold starts, real-time streaming

Get Started

Install

Get llumen running in 30 seconds

Configure

Simple environment variable setup

First Steps

Learn the basics and start chatting

API Providers

Use OpenRouter, local models, and more

Default Login: admin / P@88w0rd (Remember to change this!)