Features Overview

What Makes Llumen Special

Llumen is designed for users who want privacy and simplicity without sacrificing functionality. Here's what you get:

🚀 Performance First

- Sub-second cold starts: No waiting around

- Real-time token streaming: See responses as they're generated

- Minimal resource usage: Runs on Raspberry Pi and old laptops

- ~17MB binary size: Smaller than most container images

- <128MB RAM usage: Leaves resources for other tasks
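To make "real-time token streaming" concrete, here is a minimal sketch of how a client might read a streamed reply. It assumes the OpenAI-style server-sent event format (`data: {...}` lines ending with `data: [DONE]`); the function name `iter_stream_tokens` and the sample lines are hypothetical and are not taken from Llumen's code.

```python
import json
from typing import Iterable, Iterator


def iter_stream_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        # Skip blank keep-alive lines and anything that is not a data event.
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        # The stream ends with a literal [DONE] sentinel.
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # "delta" may be missing or null, and may carry no text at all.
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


# Hypothetical sample stream, hand-written for illustration only.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]

for token in iter_stream_tokens(sample):
    # Print each fragment as soon as it arrives instead of waiting for the end.
    print(token, end="", flush=True)
print()
```

Because the function is a generator, a UI can render each fragment the moment it is parsed, which is what makes a response appear to "type itself out".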

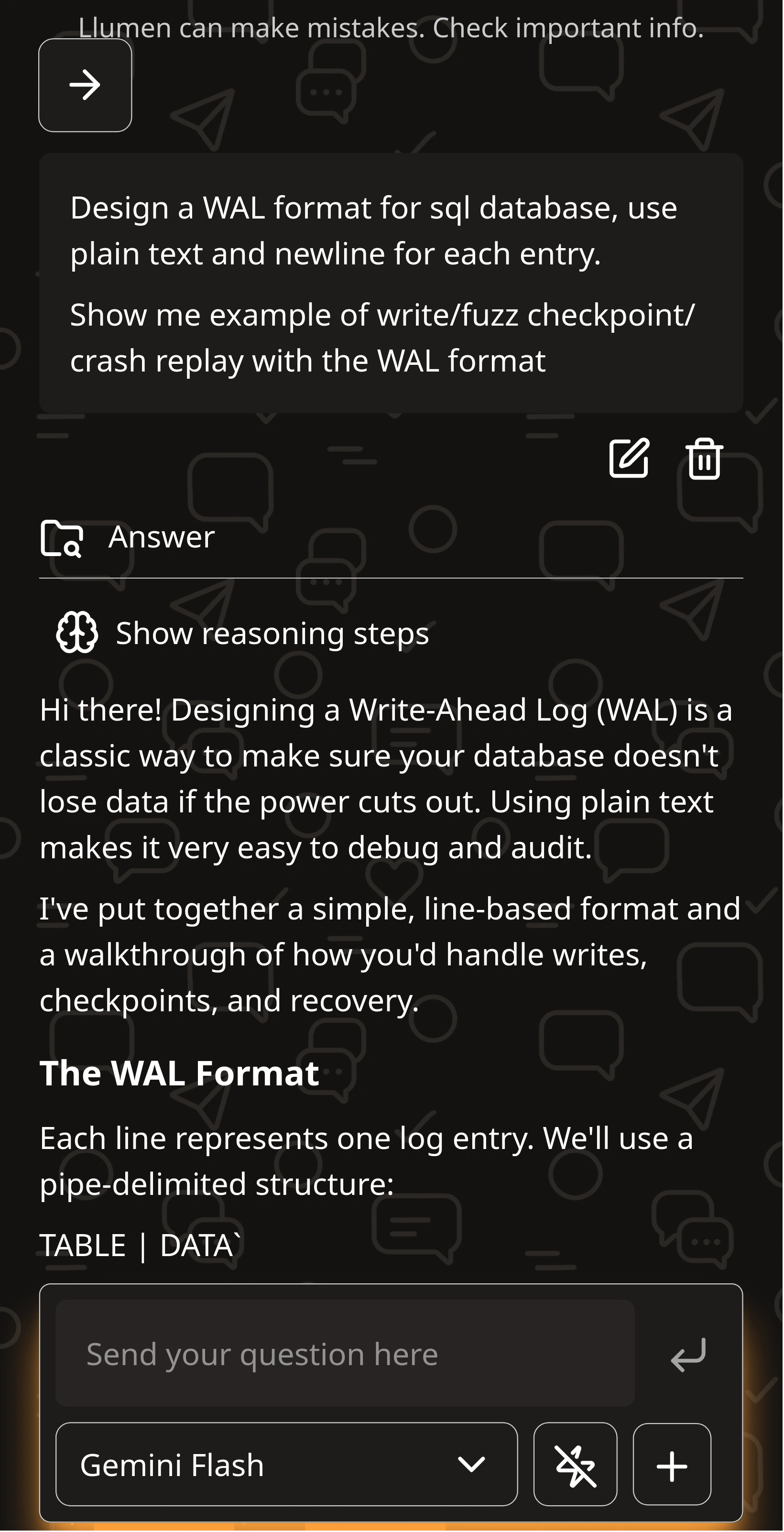

💬 Three Chat Modes

Normal Chat

Standard AI conversation for general queries and tasks

Web Search

Search the web and get AI-powered answers with sources

Deep Research

Autonomous agents that conduct in-depth research

📎 Rich Media Support

- PDF uploads: Chat about your documents

- LaTeX rendering: Perfect for math and scientific content

- Image generation: Create images directly in chat

- Markdown support: Full formatting capabilities

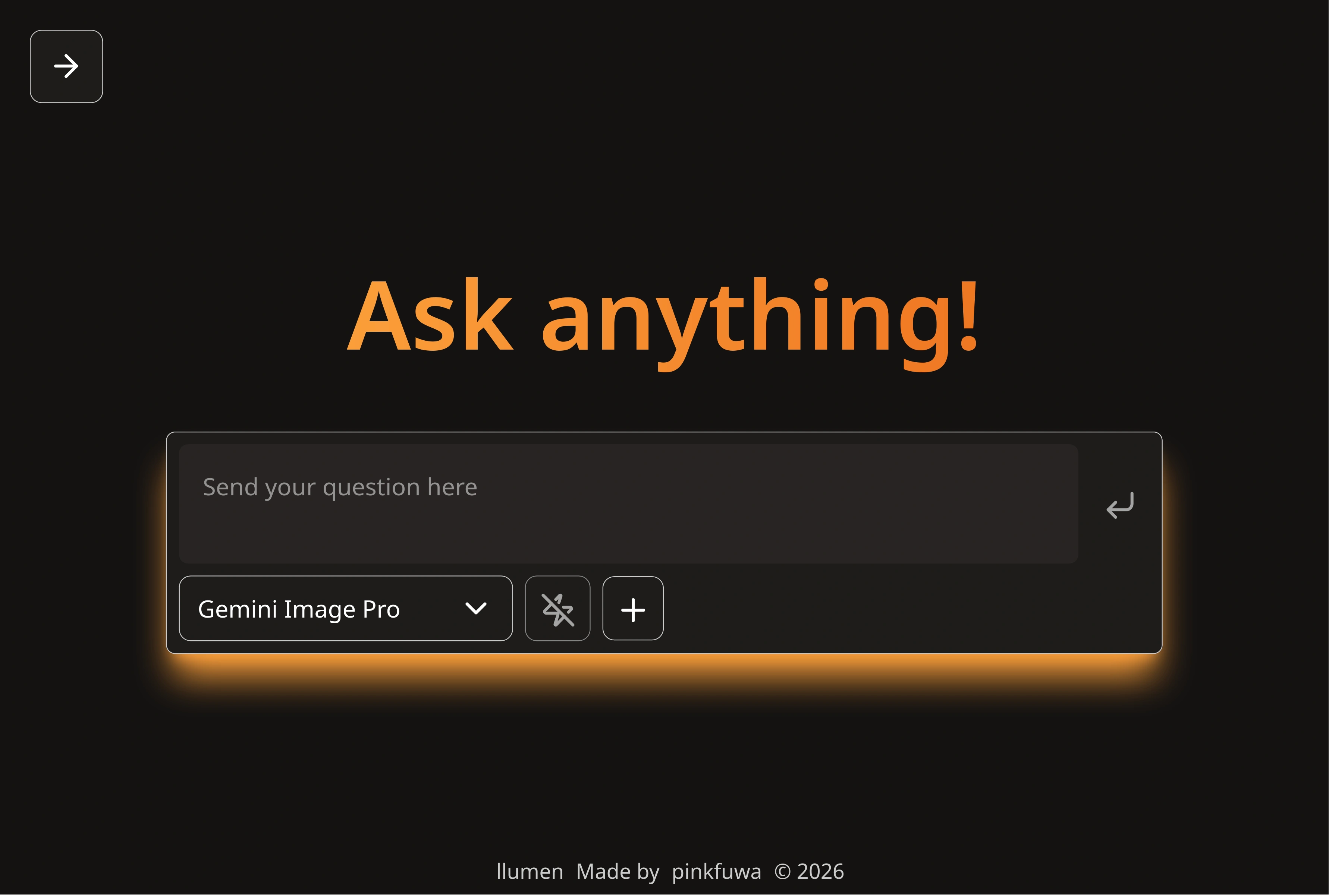

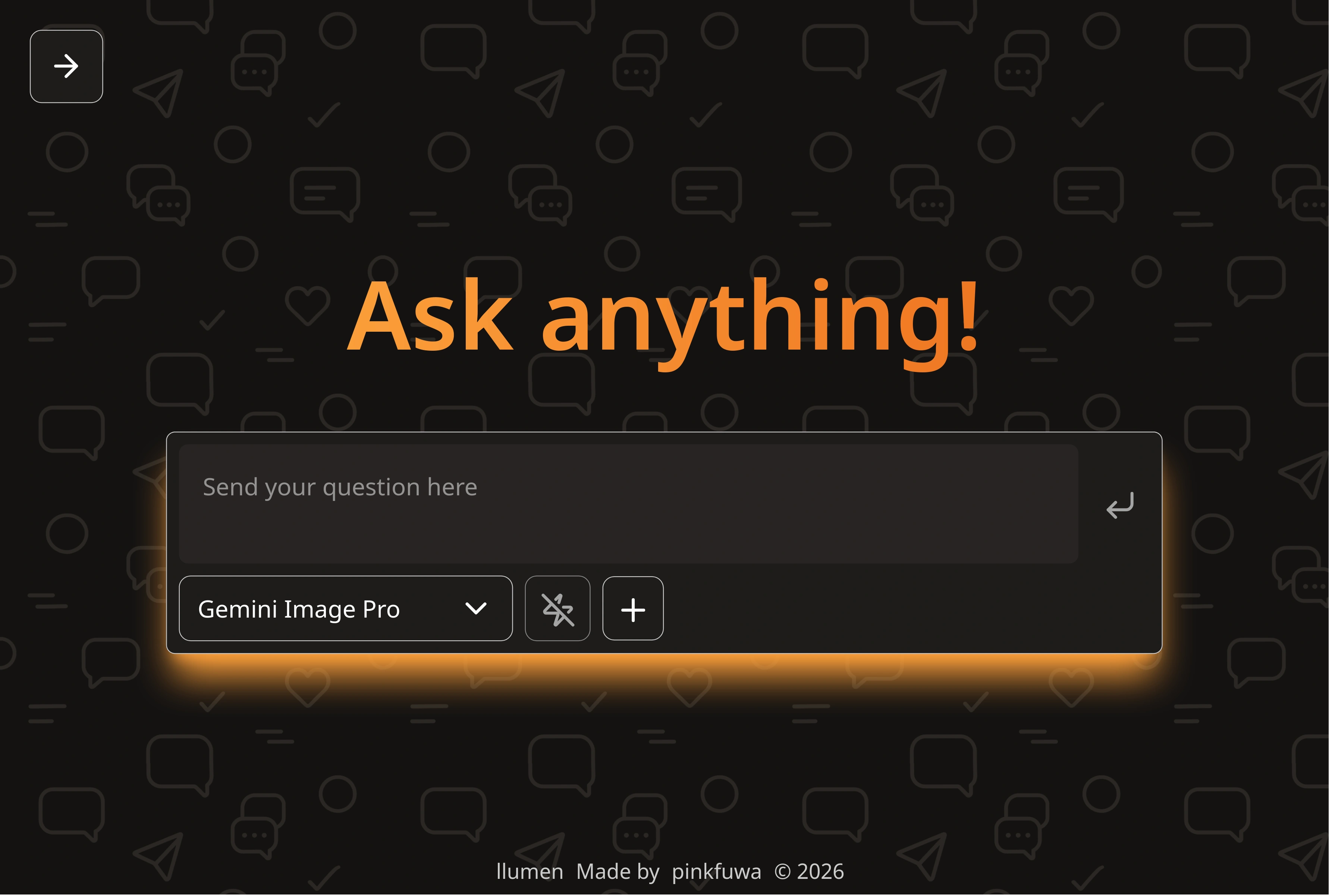

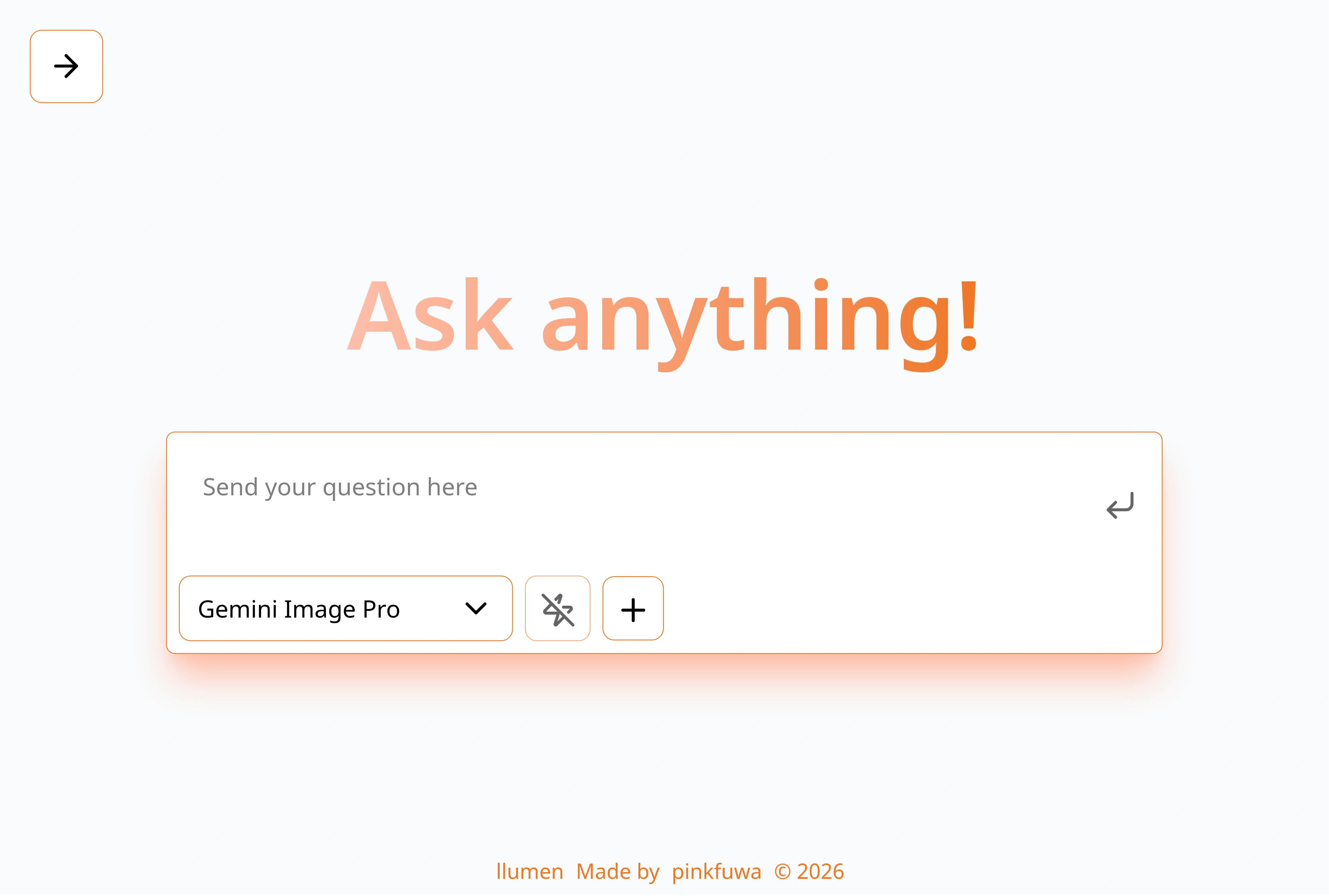

🎨 Beautiful Interface

- Mobile-optimized: First-class mobile experience

- Multiple themes: Choose from beautiful pre-made themes

- Pattern overlays: Optional visual flair

- Dark mode: Easy on the eyes

🔌 Universal API Support

Works with any OpenAI-compatible API:

- OpenRouter (recommended)

- OpenAI

- Local models (Ollama, LM Studio, etc.)

- Custom endpoints
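"OpenAI-compatible" means, roughly, that every provider above accepts the same `POST /chat/completions` request and only the base URL and API key change. The sketch below illustrates that idea with the standard library; the helper `build_chat_request`, the `BASE_URLS` table, and the model name `example-model` are assumptions for illustration, not Llumen's configuration format, and the base URLs should be checked against each provider's documentation.

```python
import json
import urllib.request

# Commonly documented base URLs (assumed here; verify for your setup).
BASE_URLS = {
    "openrouter": "https://openrouter.ai/api/v1",
    "openai": "https://api.openai.com/v1",
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def build_chat_request(
    base_url: str,
    api_key: str,
    model: str,
    prompt: str,
    stream: bool = True,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        # Same path for every provider; only the base URL differs.
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# "example-model" is a placeholder; local servers typically ignore the key.
req = build_chat_request(BASE_URLS["ollama"], "unused-for-local", "example-model", "Hello")
print(req.full_url)  # http://localhost:11434/v1/chat/completions
```

Switching providers is then a matter of passing a different base URL and key; sending the request (for example with `urllib.request.urlopen(req)`) is left out so the sketch runs without a network connection.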

🔐 Privacy & Security

- Self-hosted: Your data stays on your device

- No telemetry: Zero tracking or analytics

- No external dependencies: Works completely offline when paired with a local model

- MPL 2.0 licensed: Open source and transparent

Comparison with Alternatives

| Feature | Llumen | ChatGPT | Open WebUI |
|---|---|---|---|
| Privacy | ✅ Self-hosted | ❌ Cloud | ✅ Self-hosted |
| Setup Time | ⚡ 30 seconds | ⚡ Instant | 🐌 Hours |
| Resource Usage | 🪶 <128MB | ☁️ N/A | 💪 >512MB |
| Web Search | ✅ Built-in | ✅ Built-in | ❌ Manual |
| Deep Research | ✅ Agents | ❌ Powerful | ❌ No |
| Mobile UX | ✅ Excellent | ✅ Good | ❌ Poor |
| Binary Size | 🪶 17MB | ☁️ N/A | 📦 Container |